Melon Headband

Background

The Melon Headband started as a Kickstarter campaign in May 2013, pitched as a focus and meditation aid in an all too active world (if only they knew what was coming). The device used EEG (electroencephalography) to measure the brain's activity and derive a focus metric from the collected data. This "focus score" was then used to generate statistics over time and to control the built-in origami folding game.

When the project was eventually abandoned in 2016 and the application subsequently discontinued, many customers were left with what was essentially a silicone paperweight. Attempts were made to repurpose the device as a general EEG data collection device, but nothing compatible with the Lab Streaming Layer (LSL) standard was ever made.

I came across one of these devices in a thrift shop for 49 SEK (about 4 €). Having never heard of the company or the device in question, I naturally bought it, complete with its box, all the accessories and the proprietary USB charger >:|

After finding out that the device was practically useless in its current condition, I became quite upset and decided to take matters into my own (arguably incompetent) hands.

Reverse Engineering the BLE protocol

The Melon Headband communicates with the user's device over Bluetooth LE. I used the Linux BlueZ utilities to connect to the device and read back its properties. All communication to and from the band goes through Nordic's UART over BLE service, which is exposed as one service with two characteristics:

SERVICE: 6e400001-b5a3-f393-e0a9-e50e24dcca9e | Nordic UART Service

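- CHARACTERISTIC: 6e400003-b5a3-f393-e0a9-e50e24dcca9e | Nordic UART RX (notifications, band to client)

- CHARACTERISTIC: 6e400002-b5a3-f393-e0a9-e50e24dcca9e | Nordic UART TX (writes, client to band)

RX and TX are named from the client's point of view here; Nordic's own documentation names them from the band's side, so there 6e400002 is RX and 6e400003 is TX.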

I managed to find a copy of the original Android application, and was able to decompile it using JD-GUI.

The structure of the app is actually quite interesting, and it contains some quite dubious programming choices, such as storing the AWS S3 bucket credentials in PLAIN TEXT???? The application also gathers geolocation data, which is sent together with the collected EEG data to the aforementioned S3 bucket. Sketchy stuff, but I digress...

Eventually, after much digging, I found the class responsible for initializing a connected device:

```java
package com.axio.melonplatformkit;

class i implements Runnable {
    i(DeviceHandle.Peripheral paramPeripheral) {}

    public void run() {
        DeviceHandle.Peripheral.b(this.a, "DW0308"); // send start command !! EUREKA
        DeviceHandle.Peripheral.a(this.a, DeviceHandle.DeviceState.CONNECTED);
        DeviceHandle.Peripheral.n(this.a);
    }
}
```

Next, I confirmed that DeviceHandle.Peripheral.b() does in fact send commands to a connected device (code shortened):

```java
private boolean b(String param1String) { // convert the command string to bytes and send it
    // exception handling for getBytes("UTF-8") omitted in this shortened version
    byte[] arrayOfByte = param1String.getBytes("UTF-8");
    boolean bool = a(arrayOfByte); // send command to device
    return bool;
}

// where a() is the send function:
private boolean a(byte[] param1ArrayOfbyte) { // send command to device
    BluetoothGattService bluetoothGattService = this.m.getService(UUID.fromString("6e400001-b5a3-f393-e0a9-e50e24dcca9e"));
    BluetoothGattCharacteristic bluetoothGattCharacteristic = bluetoothGattService.getCharacteristic(UUID.fromString("6e400002-b5a3-f393-e0a9-e50e24dcca9e"));
    bluetoothGattCharacteristic.setValue(param1ArrayOfbyte);
    boolean bool = this.m.writeCharacteristic(bluetoothGattCharacteristic);
    return bool;
}
```

From this I concluded that "DW0308" is the initialization command, and I confirmed it once more using a Bluetooth sniffer app on a test device. After this command the band is initialized and ready to receive instructions; no response is sent back to the client. The client then sends the "Start Stream" command "S01", and I eventually found the code responsible for sending it as well:

```java
package com.axio.melonplatformkit;

import android.util.Log;

class j implements Runnable {
    j(DeviceHandle.Peripheral paramPeripheral) {}

    public void run() {
        if (DeviceHandle.Peripheral.b(this.a, "S01")) { // if send start stream command is successful
            String str = DeviceHandle.Peripheral.m(this.a);
            if (str == null)
                str = "???";
            Log.i(DeviceHandle.Peripheral.b(this.a), "Device opening stream: " + str);
        }
    }
}
```

The band immediately starts spitting out the raw data on the RX line.

Now this is where the real pain started. I wrote a whooollee bunch of Python test scripts to interact with the device using a variety of Python Bluetooth APIs, all of them with their own project-stopping quirks that on multiple occasions made me question every life choice I had made up to that point. In the end, Bleak seemed to work (most of the time) on my Linux install; I just had to make sure that Blueman did NOT connect to the device before the scripts did.
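For reference, a stripped-down version of such a script might look like the sketch below. It is a minimal Bleak example rather than my exact test script, and it rests on a few assumptions: that the band advertises under the name "Melon" (check with bluetoothctl and adjust DEVICE_NAME), that it accepts write-with-response on 6e400002, and that the commands are sent as plain UTF-8, just like DeviceHandle.Peripheral.b() does. encode_command() and stream() are hypothetical helper names.

```python
import asyncio

# Nordic UART Service characteristics, as read back with BlueZ
NUS_WRITE = "6e400002-b5a3-f393-e0a9-e50e24dcca9e"   # client -> band (commands)
NUS_NOTIFY = "6e400003-b5a3-f393-e0a9-e50e24dcca9e"  # band -> client (raw data)

INIT_CMD = "DW0308"  # sent by class i in the decompiled app
START_CMD = "S01"    # sent by class j ("Start Stream")

DEVICE_NAME = "Melon"  # ASSUMPTION: advertised name, verify with bluetoothctl


def encode_command(command: str) -> bytes:
    """Convert a command string to bytes the same way the app does (UTF-8)."""
    return command.encode("utf-8")


async def stream(seconds: float = 10.0) -> list:
    """Connect, initialize the band, start streaming and collect raw packets."""
    # Imported here so the helpers above can be used without Bleak installed.
    from bleak import BleakClient, BleakScanner

    device = await BleakScanner.find_device_by_name(DEVICE_NAME, timeout=10.0)
    if device is None:
        raise RuntimeError(f"no device advertising as {DEVICE_NAME!r} found")

    packets = []

    def on_notify(_sender, data: bytearray) -> None:
        packets.append(bytes(data))  # each notification should be one 20-byte packet

    async with BleakClient(device) as client:
        await client.start_notify(NUS_NOTIFY, on_notify)
        # ASSUMPTION: write-with-response is accepted; try response=False if not
        await client.write_gatt_char(NUS_WRITE, encode_command(INIT_CMD), response=True)
        await client.write_gatt_char(NUS_WRITE, encode_command(START_CMD), response=True)
        await asyncio.sleep(seconds)
        await client.stop_notify(NUS_NOTIFY)
    return packets
```

Run it with `packets = asyncio.run(stream(10.0))`; each collected packet can be turned into the hex string the decoding code expects with `packet.hex()`.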

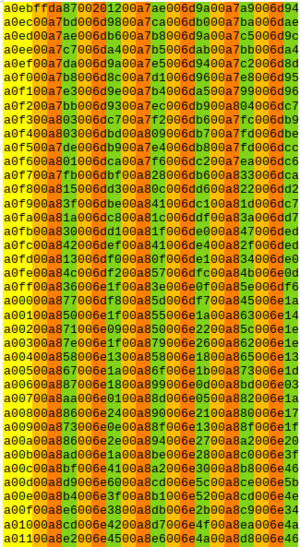

I managed to gather a bunch of packets to analyze, and there is a LOT to unpack here. The packets are always 20 bytes long, and the first two bytes are skipped, as can be seen in the decompiled packet handler function (shortened):

```java
protected void a(short[] paramArrayOfshort) {
    // copy the 18 bytes that follow the first two into the sample buffer
    System.arraycopy(paramArrayOfshort, 2, this.e, 0, 18);
    // ...
}
```

From there it is quite easy to see that the remaining data is split into six parts: 3 samples for each of the 2 channels, each marked as a group of orange, red and green bytes. The first two bytes of each group (orange and red) simply count up from some initial value to 0xFFFF and then roll over. This value (really one 2-byte value) is incremented every 28-29 values, so about 3 times per second is my best guess. It is most likely there to keep track of time, since the sample rate may fluctuate a bit. The green values are the actual samples themselves!
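If that counter is ever needed as a timestamp, the roll-over at 0xFFFF has to be undone. A minimal sketch, assuming the counter values have already been read out as unsigned 16-bit integers and that the counter never advances by 0x10000 or more between two readings (safe at roughly three increments per second); unwrap_counter() is a hypothetical helper:

```python
def unwrap_counter(values: list) -> list:
    """Turn a 16-bit counter that rolls over at 0xFFFF into a monotonic count."""
    if not values:
        return []
    unwrapped = [values[0]]
    for prev, cur in zip(values, values[1:]):
        step = (cur - prev) & 0xFFFF  # forward distance, modulo 2**16
        unwrapped.append(unwrapped[-1] + step)
    return unwrapped
```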

I then made a 100-second recording, which gave 8349 packets, i.e. 83.49 packets/s. Multiplying that by the number of samples per packet (3 per channel) gives a sample rate of ≈ 250 samples/s, i.e. 250 Hz! This means the highest frequency the device can capture is 125 Hz (the Nyquist frequency), not bad at all.
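The same arithmetic as two small helpers, handy for re-checking the rate on a new recording. The function names are hypothetical, and samples_per_packet=3 is the per-channel count from the packet layout described above:

```python
def sample_rate(n_packets: int, seconds: float, samples_per_packet: int = 3) -> float:
    """Per-channel sample rate in Hz from a packet count over a recording."""
    return n_packets * samples_per_packet / seconds


def nyquist(sfreq: float) -> float:
    """Highest frequency that can be represented at sampling rate sfreq."""
    return sfreq / 2


fs = sample_rate(8349, 100.0)  # the 100-second recording
print(f"sample rate ~ {fs:.1f} Hz, Nyquist frequency ~ {nyquist(fs):.1f} Hz")
```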

Now, to make any sense of this data, I needed to look at the code that handles each packet and saves the resulting data to a buffer. The decompiled version of this class was quite difficult to follow, so I used ChatGPT to help me understand what was going on (my coffee-language skills are quite rusty) so that I could rewrite the functionality in Python.

```python
def conversion_method(byte_string):
    # hex string -> bytes, then keep the 18 payload bytes after the first two
    byte_array = bytes.fromhex(byte_string)
    raw_samples = [b & 0xFF for b in byte_array]
    raw_samples[:] = raw_samples[2:20]

    # one [channel 0, channel 1] pair per sample, three samples per packet
    Sample1 = [0, 0]
    Sample2 = [0, 0]
    Sample3 = [0, 0]

    skip_bit = 0  # byte offset of the current sample (6 bytes per sample)
    i = 0         # index of the current sample within the packet
    while skip_bit < 18:
        for j in range(2):  # channel index
            temp_sample = [0, 0, 0]
            for loop in range(3):
                temp_sample[loop] = raw_samples[skip_bit + loop + j * 3]

            val1 = temp_sample[0] & 0xFF
            val2 = temp_sample[1] & 0xFF
            val3 = temp_sample[2] & 0xFF
            # big-endian 24-bit value, val1 is the most significant byte
            sample_value = (val1 << 16) + (val2 << 8) + val3

            if i == 0:
                Sample1[j] = sample_value  # & 0xFF
            elif i == 1:
                Sample2[j] = sample_value  # & 0xFF
            elif i == 2:
                Sample3[j] = sample_value  # & 0xFF

        skip_bit += 6
        i += 1

    final_sample1 = multiplier(Sample1)
    final_sample2 = multiplier(Sample2)
    final_sample3 = multiplier(Sample3)
    return final_sample1, final_sample2, final_sample3


def multiplier(sample):
    # scale each channel value by 0.4 / (2**23 - 1)
    final_sample = [0] * len(sample)
    for i in range(len(sample)):
        final_sample[i] = sample[i] * 0.4 / ((2 ** 23) - 1)
    return final_sample
```

First, the hex string is converted to bytes and cropped to the 18 payload bytes. Then a list is initialized for each of the three samples. The function loops over the payload, copies each sample's three bytes into a temporary list, masks them with & 0xFF (presumably carried over from Java, where it turns a signed byte into its unsigned value; in Python it changes nothing) and shifts them together into a single 24-bit integer, which is stored under the corresponding sample and channel. Real messy, but it gets the job done.
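Since the original is admittedly messy, here is a more compact sketch of the same decoding using int.from_bytes(). It should give the same numbers as conversion_method(), including the 0.4 / (2^23 - 1) factor that multiplier() applies, except that it takes the raw 20 bytes instead of a hex string and returns a list instead of a tuple; decode_packet() is a hypothetical name:

```python
def decode_packet(packet: bytes) -> list:
    """Decode one 20-byte packet into three [channel 0, channel 1] samples."""
    if len(packet) != 20:
        raise ValueError(f"expected 20 bytes, got {len(packet)}")
    payload = packet[2:]  # drop the first two bytes, like arraycopy(src, 2, dst, 0, 18)
    samples = []
    for s in range(3):      # three samples per packet
        row = []
        for c in range(2):  # two channels per sample
            start = 6 * s + 3 * c
            # unsigned big-endian 24-bit value, same as (b0 << 16) + (b1 << 8) + b2
            value = int.from_bytes(payload[start:start + 3], "big")
            row.append(value * 0.4 / ((2 ** 23) - 1))  # same scaling as multiplier()
        samples.append(row)
    return samples
```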

The data is then passed to the multiplier() function, which iterates over the two channel values of each sample and scales each of them by the factor 0.4 / (2^23 - 1).

This part caught my eye because I genuinely have no idea what it does! My best guess is that it is some kind of rounding function? 2^-23 is the machine epsilon of a single-precision float, so maybe it has something to do with that? No clue.
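One plausible reading, which is an assumption and not something confirmed from the decompiled code: 2^23 - 1 = 8388607 is the largest positive value of a signed (two's complement) 24-bit integer, and a common way to convert signed 24-bit ADC readings to volts is code × (V_ref / gain) / (2^23 - 1). Read that way, 0.4 would be the full-scale input range (0.4 V), and the 24-bit values would need sign extension before scaling, which conversion_method() does not do. A sketch of that interpretation (to_signed_24() and code_to_volts() are hypothetical names):

```python
FULL_SCALE = 0.4           # numerator of the factor in multiplier()
MAX_CODE = (2 ** 23) - 1   # largest positive signed 24-bit integer (8388607)


def to_signed_24(value: int) -> int:
    """Interpret an unsigned 24-bit integer as two's complement."""
    if not 0 <= value < (1 << 24):
        raise ValueError("value must fit in 24 bits")
    return value - (1 << 24) if value & 0x800000 else value


def code_to_volts(value: int) -> float:
    """ASSUMPTION: scale a signed 24-bit ADC code so +MAX_CODE maps to +0.4."""
    return to_signed_24(value) * FULL_SCALE / MAX_CODE
```

If this reading is right, small negative readings currently come out close to 0.8 instead of close to 0 (0xFFFFFF, i.e. -1, scales to about 0.8), which would show up as sudden jumps whenever the signal crosses zero.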

With the above functionality successfully ported to Python, I threw together a script and was able to generate actual usable data!

Making Python talk brainwaves

Jiggling Python into working with EEG data is easily done with the MNE library, which contains some really useful analysis tools, such as Fourier transforms for the power bands (delta, theta, alpha, beta and gamma) and various EOG (electrooculography) tools for removing eye-movement artifacts. I used MNE to create some nice visualizations, but that's really where its use stopped for me, as I wanted to focus on creating an LSL stream so that the data can be analyzed by other software.
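MNE does this properly (PSD estimation, windowing, filtering). Purely to illustrate what a band power is, here is a dependency-free sketch that runs a plain DFT over one channel and sums the power in each band. This is a stand-in, not MNE's method: the band edges are common textbook values and an assumption (conventions differ), gamma is capped at 45 Hz to stay clear of mains hum, and band_powers() is a hypothetical name. For real use, MNE's or NumPy's FFT-based tools are the better choice.

```python
import cmath
import math

# ASSUMPTION: common textbook band edges in Hz; exact limits vary between sources
BANDS = {
    "delta": (1.0, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def band_powers(signal: list, sfreq: float) -> dict:
    """Sum DFT power per EEG band for one channel (naive DFT, fine for short windows)."""
    n = len(signal)
    if n == 0:
        raise ValueError("signal is empty")
    mean = sum(signal) / n
    x = [v - mean for v in signal]  # remove the DC offset first

    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2 + 1):
        freq = k * sfreq / n
        band = next((b for b, (lo, hi) in BANDS.items() if lo <= freq < hi), None)
        if band is None:
            continue  # frequency bin outside every band, skip the work
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        powers[band] += abs(coeff) ** 2 / n
    return powers
```

With one second of data at 250 Hz, each DFT bin is 1 Hz wide, so the bands line up neatly with whole bins.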

Lab Streaming Layer

Lab Streaming Layer (LSL) is an open-source standard used in scientific environments to stream data between devices and software. It is currently supported (officially or unofficially) by over 100 devices, many of which are very similar to the Melon Headband, such as the Muse headband.

Once I had a reliable method for receiving packets from the band and processing the data, the integration was very smooth. I simply had to iterate over each 3-sample group, split out the 2 channels (Fp1 and Fp2) and push them to an LSL outlet.
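A minimal sketch of that step with pylsl, assuming the decoded packet is the (sample1, sample2, sample3) output of conversion_method() and that channel 0 is Fp1. The stream name and source id are hypothetical, and pylsl is imported inside make_outlet() so the other helpers work without it:

```python
CHANNELS = ["Fp1", "Fp2"]  # ASSUMPTION: channel index 0 is Fp1, index 1 is Fp2
SFREQ = 250.0              # measured rate from the 100-second recording


def packet_to_rows(samples) -> list:
    """Turn the three [ch0, ch1] samples of one packet into [Fp1, Fp2] rows."""
    return [[float(s[0]), float(s[1])] for s in samples]


def make_outlet():
    """Create an LSL outlet describing the band as a 2-channel EEG stream."""
    from pylsl import StreamInfo, StreamOutlet  # imported lazily, needs pylsl

    info = StreamInfo(
        name="MelonHeadband",        # hypothetical stream name
        type="EEG",
        channel_count=len(CHANNELS),
        nominal_srate=SFREQ,
        channel_format="float32",
        source_id="melon-headband",  # hypothetical source id
    )
    channels = info.desc().append_child("channels")
    for label in CHANNELS:
        channels.append_child("channel").append_child_value("label", label)
    return StreamOutlet(info)


def push_packet(outlet, samples) -> None:
    """Push one decoded packet (three samples) to the outlet, sample by sample."""
    for row in packet_to_rows(samples):
        outlet.push_sample(row)
```

Create the outlet once with `outlet = make_outlet()`, then call `push_packet(outlet, conversion_method(hex_packet))` for every incoming packet.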

OSC Server

OSC was very easy to implement (its structure is similar to LSL in how the data is handled), but for an OSC stream to be useful I would like to extract actual focus metrics, and maybe even isolate the EOG signal to use for eye tracking? Something similar to this.
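The raw-sample part of an OSC server can be sketched with python-osc, handling the data the same way as the LSL stream: one message per sample, carrying both channels. The host, port and address pattern are hypothetical, and python-osc is imported lazily inside make_client():

```python
OSC_HOST = "127.0.0.1"      # hypothetical receiver address
OSC_PORT = 9000             # hypothetical receiver port
OSC_ADDRESS = "/melon/eeg"  # hypothetical OSC address pattern


def packet_to_messages(samples) -> list:
    """One (address, [Fp1, Fp2]) message per sample in a decoded packet."""
    return [(OSC_ADDRESS, [float(s[0]), float(s[1])]) for s in samples]


def send_packet(client, samples) -> None:
    """Send a decoded packet through an OSC client such as SimpleUDPClient."""
    for address, values in packet_to_messages(samples):
        client.send_message(address, values)


def make_client():
    """Create a UDP OSC client (needs python-osc)."""
    from pythonosc.udp_client import SimpleUDPClient

    return SimpleUDPClient(OSC_HOST, OSC_PORT)
```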

To be continued

It turns out that analyzing raw brain data is really difficult! Who would have thought ;)

As mentioned earlier, my main goal is to get a reliable focus metric to pipe into many other pieces of software, and getting this metric has proved to be extremely hard.

Currently I'm trying a different approach to focus metrics, using machine learning. That project will get its own page once it's done.